GoogieHost gives you an easy way to help search engine crawlers understand and interpret your website!

Sounds confusing, right? GoogieHost has built a free robots.txt generator that lets you decide which parts of your website search engines may crawl, which rules crawlers should follow, and which search engines those rules apply to.

A robots.txt file is a plain-text file you create for your website that gives instructions robots can read and follow. It tells search engines which pages they may crawl and which ones to skip, such as pages you do not want indexed for personal or other reasons.

A robots.txt file instructs search engine crawlers which URLs on your website they can access.
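To make this concrete, here is a minimal sketch using Python's standard-library `urllib.robotparser` module, which reads robots.txt rules and answers whether a given crawler may fetch a URL. The rules, the `example.com` URLs, and the `/admin/` and `/private/` paths are hypothetical placeholders, not output from GoogieHost's generator.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content: every crawler ("*") may access the
# site except the /admin/ and /private/ directories.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /private/
"""

parser = RobotFileParser()
# parse() accepts an iterable of lines, so no network request is needed.
parser.parse(ROBOTS_TXT.splitlines())

for url in ("https://example.com/blog/post-1",
            "https://example.com/admin/login"):
    allowed = parser.can_fetch("Googlebot", url)
    print(f"{url}: {'allowed' if allowed else 'blocked'}")
```

Running it prints the blog post as `allowed` and the admin login page as `blocked`, because only the second URL starts with a disallowed path.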

It is mainly used to keep your website from being overloaded with crawler requests. It is not a way to keep a website off Google; it only stops search engines from crawling certain pages.

There's a simple difference between a sitemap and robots.txt. A sitemap works like a map that points search engines to all the pages on your website.

A robots.txt file, by contrast, separates the pages you want search engines to crawl from the ones you want them to skip. Site owners do this to reduce crawler traffic and to keep less important pages out of the crawl at the start.
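The two often work together, since a robots.txt file can also point crawlers at your sitemap. The sketch below parses a hypothetical file (the `/drafts/` path and the sitemap URL are placeholders) with Python's standard `urllib.robotparser`. The `Sitemap` directive and the `site_maps()` method are real; `site_maps()` requires Python 3.8 or later.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt that does both jobs: the Disallow rule splits
# off pages crawlers should skip, and the Sitemap line points crawlers
# at the list of pages you do want found.
ROBOTS_TXT = """\
User-agent: *
Disallow: /drafts/

Sitemap: https://example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# site_maps() returns the listed Sitemap URLs, or None if there are none.
print(parser.site_maps())                                     # ['https://example.com/sitemap.xml']
print(parser.can_fetch("*", "https://example.com/drafts/x"))  # False
```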

Yes, a robots.txt file is necessary if you do not want search engines to crawl certain backend or even frontend pages before they are ready for the public.

Your reasons for keeping those pages out of view may differ; it all depends on your needs and requirements.

Without one, robots will crawl and index your pages as they normally do, skipping nothing. If you want certain pages skipped and kept in the background, this tool is what you'll need!
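You can see this default behavior with Python's standard `urllib.robotparser`: when the parser has no rules at all, it treats every URL as crawlable. (Its `read()` method does the same when a site's robots.txt returns a 404.) The paths below are hypothetical examples of pages you might have preferred to hide.

```python
from urllib.robotparser import RobotFileParser

# Simulate a site with no robots.txt rules (an empty file).
parser = RobotFileParser()
parser.parse([])

# With no rules, every URL is treated as crawlable, including pages you
# might have wanted kept out of the crawl (hypothetical paths).
for path in ("/", "/admin/", "/staging/new-design"):
    print(path, parser.can_fetch("Googlebot", "https://example.com" + path))
```

Each line prints `True`, which is why you need explicit `Disallow` rules for anything you want skipped.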

Here are the steps to create a robots.txt file:

1. Visit our site and look for the Free Robots.txt Generator.
2. Fill in the required details:
   - Robots that you want to allow
   - Crawl-Delay
   - Sitemap link (if you have one for that website)
   - Search robots that you want to allow or deny
   - Restricted directories
3. Create ‘Robots.txt’ and save it if you want to.

Tada! You’ll get the results within seconds!
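To illustrate what a generator does with those fields, here is a hypothetical sketch that turns form-style inputs into robots.txt text. The function name `build_robots_txt`, its parameters, and the sample values are illustrative assumptions, not GoogieHost's actual implementation; the output is then parsed back with Python's standard `urllib.robotparser` as a sanity check.

```python
from urllib.robotparser import RobotFileParser


def build_robots_txt(allow_all_robots=True, crawl_delay=None, sitemap_url=None,
                     denied_robots=(), restricted_dirs=()):
    """Build robots.txt text from generator-style fields (hypothetical sketch)."""
    lines = []
    # Each robot you deny gets its own group that blocks the whole site.
    for robot in denied_robots:
        lines += [f"User-agent: {robot}", "Disallow: /", ""]

    # The "*" group applies to every robot not named above.
    lines.append("User-agent: *")
    if not allow_all_robots:
        lines.append("Disallow: /")  # refuse all robots by default
    elif restricted_dirs:
        lines += [f"Disallow: {d}" for d in restricted_dirs]
    else:
        lines.append("Disallow:")  # an empty value means "allow everything"
    if crawl_delay is not None:
        lines.append(f"Crawl-delay: {crawl_delay}")

    # Sitemap lines are independent of any User-agent group.
    if sitemap_url:
        lines += ["", f"Sitemap: {sitemap_url}"]
    return "\n".join(lines) + "\n"


robots_txt = build_robots_txt(crawl_delay=10,
                              sitemap_url="https://example.com/sitemap.xml",
                              denied_robots=["BadBot"],
                              restricted_dirs=["/cgi-bin/", "/tmp/"])
print(robots_txt)

# Round-trip check: parse the generated text and query it.
parser = RobotFileParser()
parser.parse(robots_txt.splitlines())
print(parser.can_fetch("Googlebot", "https://example.com/tmp/cache.html"))  # False
print(parser.can_fetch("BadBot", "https://example.com/"))                   # False
print(parser.crawl_delay("Googlebot"))                                      # 10
```

Giving each denied robot its own group keeps the default `*` rules simple; a real generator may order or group directives differently.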

The robots.txt generator not only keeps robots from crawling the pages you restrict, but also helps your website run smoothly by keeping unnecessary robot traffic from overloading it.
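The load-reducing effect comes from well-behaved crawlers skipping restricted URLs and pausing between requests. The sketch below models such a crawler with a hypothetical `plan_crawl` helper built on Python's standard `urllib.robotparser`; it computes a request schedule instead of actually sleeping. Not every crawler honors `Crawl-delay` (Google, for example, has said it does not support that rule), so treat this as an illustration of the idea rather than how any particular search engine behaves.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: skip /search pages and wait 5 seconds between requests.
ROBOTS_TXT = """\
User-agent: *
Disallow: /search
Crawl-delay: 5
"""


def plan_crawl(parser, user_agent, urls):
    """Return (seconds_from_start, url) pairs a polite crawler would follow."""
    delay = parser.crawl_delay(user_agent) or 0  # None when no Crawl-delay is set
    plan, t = [], 0
    for url in urls:
        if not parser.can_fetch(user_agent, url):
            continue  # restricted URL: never requested, so no load on the server
        plan.append((t, url))
        t += delay
    return plan


parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())
urls = ["https://example.com/", "https://example.com/search?q=hosting",
        "https://example.com/pricing"]
for seconds, url in plan_crawl(parser, "ExampleBot", urls):
    print(f"t={seconds}s  GET {url}")
```

Here the crawler requests the home page at `t=0s`, skips the search page entirely, and waits until `t=5s` before requesting the pricing page.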

Robots.txt supports your website's SEO by steering crawlers away from the pages you don't want indexed, which also cuts unnecessary traffic and overloading.

Yes, it's important for SEO: it keeps pages you do not want indexed out of the crawl and helps prevent your website from being overloaded.

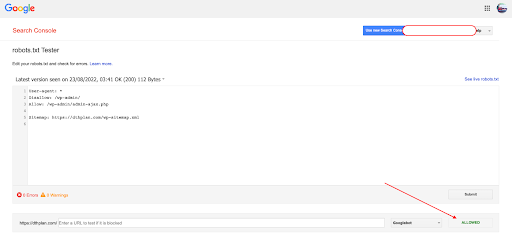

All you have to do is open Google's robots.txt Tester in Search Console. You will find an option called “Add Property Now”.

Under that section, you will find the sites in your console. Select the site you want to test and click “Test”.

You will get the results in seconds. It’ll look something like this: